Deepfake Deception: How AI-Generated Ads Are Misusing Nigerian Public Figures to Scam the Public

BY: Mustapha Lawal

Deepfakes, AI-fabricated audio and video, are now a sinister threat in Nigeria. Cloned images and voices of public figures are used in fraudulent ads, eroding public trust and undermining consumer protection. Globally, AI-powered fraud is escalating as generative tools lower the technical bar for cybercriminals. As Nigeria’s digital market expands, robust security measures are urgently needed to combat this growing threat.

In recent weeks, social media platforms like Facebook and Instagram have been flooded with suspicious advertisements featuring the familiar faces and voices of notable Nigerians, from revered religious leaders to respected journalists, as well as the names of federal agencies.

These videos, which appear highly convincing at first glance, promote products like miracle cures for prostate cancer and treatments for erectile dysfunction, while others solicit public participation in auctions for impounded cars and rice allegedly handled by the Nigeria Customs Service.

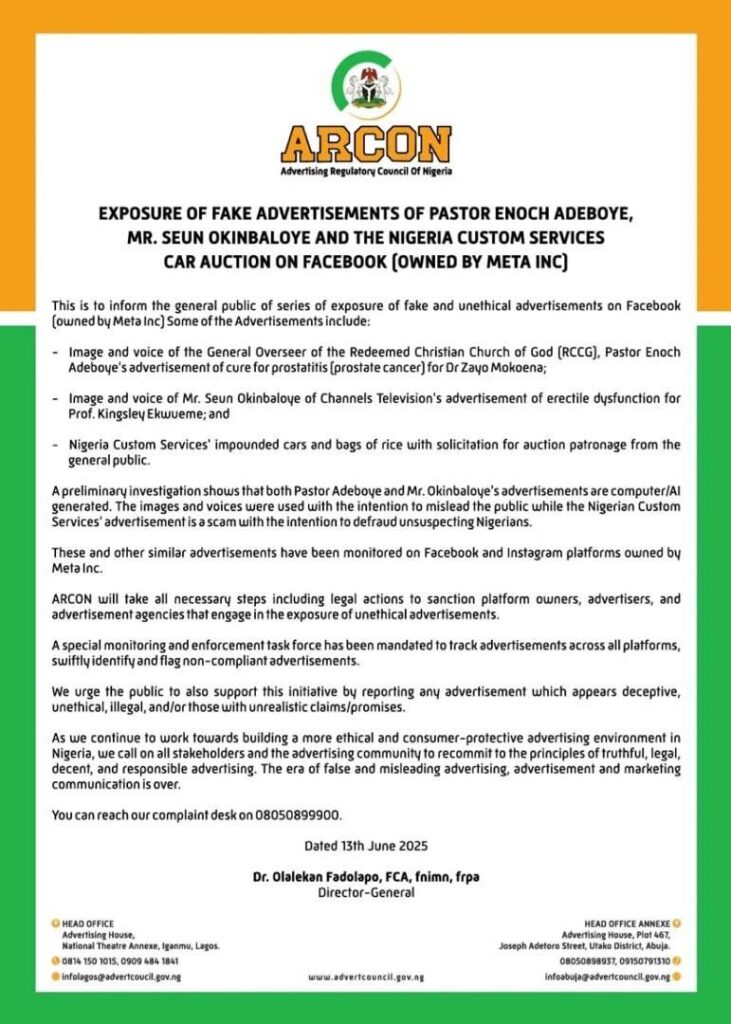

The problem? None of these advertisements are real, and the individuals or institutions being showcased have nothing to do with them. On June 13, 2025, Nigeria’s advertising watchdog, the Advertising Regulatory Council of Nigeria (ARCON), raised the alarm with a formal public notice. The statement revealed that the videos in question are AI-generated and were created without the knowledge or consent of the individuals featured.

In particular, ARCON identified a deepfake video of Pastor Enoch Adeboye, General Overseer of the Redeemed Christian Church of God (RCCG), promoting a supposed prostate cancer cure, as well as another video featuring the likeness of Seun Okinbaloye, the respected political anchor at Channels Television, appearing to endorse an erectile dysfunction product. Both cases were described as gross misrepresentations, orchestrated with the intention to deceive and defraud unsuspecting Nigerians.

The misuse of AI to clone voices and faces is part of a growing global concern around digital manipulation. The Nigeria Customs Service was also falsely implicated, with fake ads claiming that seized items, including cars and bags of rice, were being auctioned via Facebook. These kinds of scams prey on the economic vulnerabilities of Nigerians and manipulate public trust in institutions and personalities to lend credibility to false offerings.

Adding to the urgency of the matter, Seun Okinbaloye personally issued a disclaimer via his verified LinkedIn page. In his words:

“Disclaimer to AI-generated videos and promotional materials using my face and voice to market products which are not verified and unauthorised by me. I advise the general public to beware. There are efforts to stop these fraudulent efforts, and it’s very unfortunate that these platforms are allowing these frauds to perpetrate all these.”

This situation underscores a larger concern: the unchecked spread of deceptive content powered by artificial intelligence on major social media platforms. While Facebook and Instagram, both owned by Meta, have made efforts in content moderation, the circulation of such deepfakes highlights significant gaps in their content oversight systems, especially in Africa.

Unfortunately, this is not Nigeria’s first brush with AI-assisted fraud. Scammers are using AI to generate realistic text, images, and even voice recordings, which makes fake job offers, phishing attempts, and deepfakes more convincing and harder to detect.

The cost of such deception is significant. Financially, it leads to real losses for Nigerians, many of whom are already economically vulnerable. Socially, it erodes trust in institutions and public figures. Psychologically, it breeds fear and confusion, especially when the content is tied to health, religion, or security. In a country where millions still rely on word-of-mouth and forwarded messages via WhatsApp and Facebook as primary sources of information, the risks are amplified.

Conclusion

The misuse of AI in deceptive advertising is no longer a distant threat; it is already here. As public figures become targets of AI-generated impersonations, it is clear that the battle against misinformation has entered a new, more complex phase, and the line between what is real and what is manipulated is becoming harder to detect. This makes digital literacy and regulatory oversight more important than ever. While AI holds potential for innovation, its misuse, particularly in the realm of advertising, poses a serious threat to consumer protection and public trust. Nigerians, like citizens everywhere, must remain vigilant in an era of persuasive fakes and fabricated endorsements.